Reducing MCP Token Usage by 100x: Optimization Strategies That Work

The Rise of MCP and the Hidden Cost of Token Consumption

The Model Context Protocol (MCP) is rapidly becoming the standard for building powerful AI agents that can interact with the digital world. By providing a structured way for large language models (LLMs) to use external tools and APIs, MCP unlocks a new realm of possibilities for automation and intelligent systems. However, as developers rush to embrace this new paradigm, many are encountering a significant and often unexpected hurdle: the high cost of token consumption.

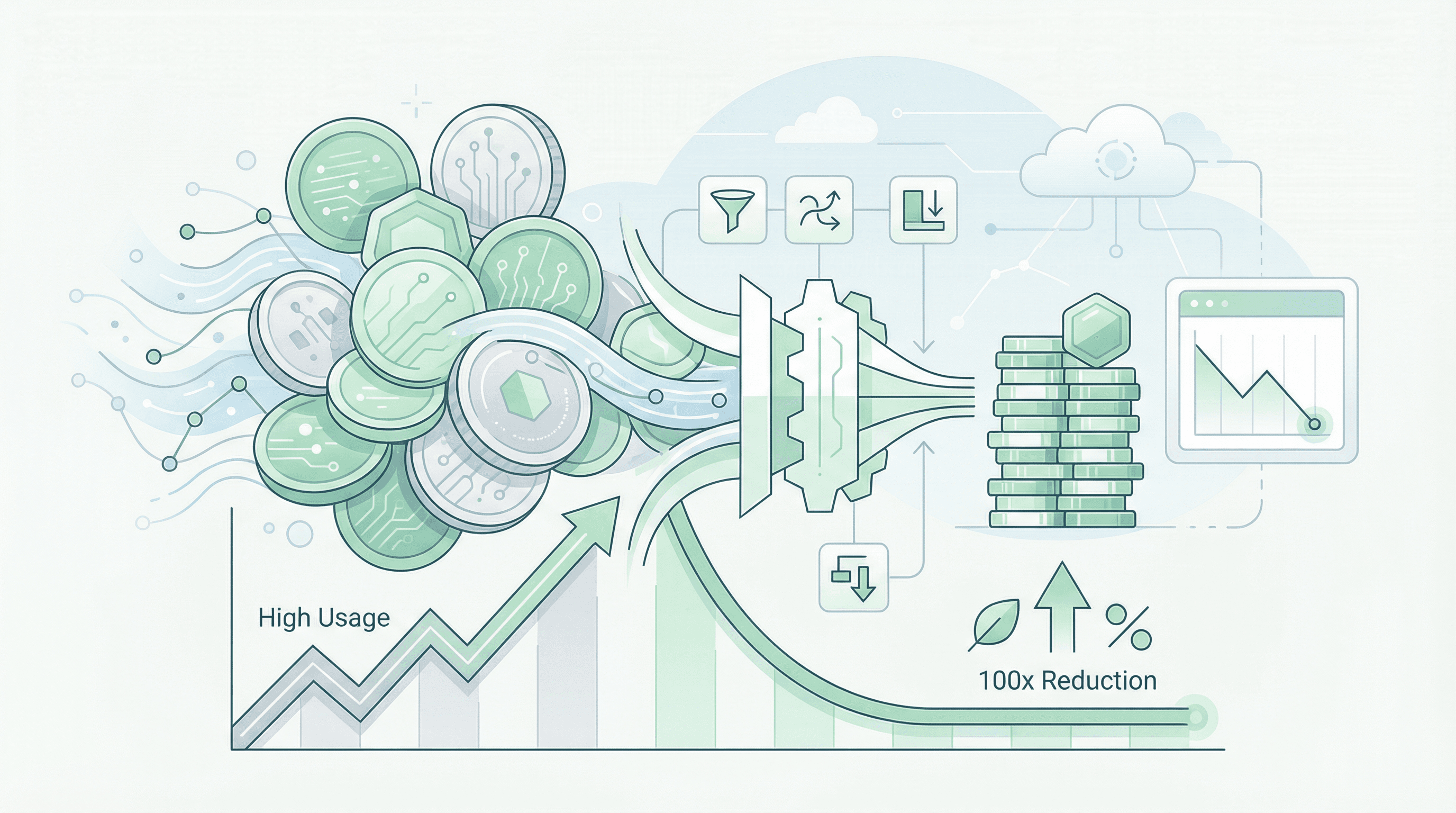

Every interaction with an LLM, whether it's a prompt, a tool definition, or an API response, consumes tokens. And as the complexity of our MCP servers grows, so does the number of tokens required to operate them. This "token bloat" can quickly lead to spiraling costs, making it difficult to build and scale MCP-powered applications economically. But what if you could reduce your token usage by 100x or even more? In this article, we'll explore two powerful, real-world strategies that can help you achieve just that: Dynamic Toolsets and GraphQL-powered MCP servers.

The Token Bloat Problem in Traditional MCP Implementations

To understand the solution, we first need to understand the problem. The most common approach to building an MCP server is to simply wrap an existing API, exposing each endpoint as a separate tool. While this method is quick and easy, it often leads to significant token inefficiency due to two main factors:

- Verbose API Responses: Many APIs are designed to be comprehensive. They return large JSON payloads with nested data and metadata that may not be relevant to the LLM's current task, and all of this extra data consumes tokens.

- Tool Proliferation: To combat verbose responses, developers often break large APIs down into smaller, more granular tools. Every tool definition is typically sent to the model as part of its context, though. A proliferation of tools therefore increases both the complexity of the MCP server and the number of tokens needed just to describe the toolset.

Imagine an MCP server that interacts with a user management API. A simple request for a user's name and email might trigger a getUser tool that returns the entire user object, including their profile, permissions, and usage statistics. This is a classic case of over-fetching, and it's a major source of token waste. For example, a single API call returning user data could look like this:

```json
{
  "user": {
    "id": "12345",
    "profile": {
      "name": "John Doe",
      "email": "john@example.com",
      "preferences": { /* 50+ fields */ },
      "metadata": { /* extensive tracking data */ }
    },
    "permissions": [ /* 20+ permission objects */ ],
    "usage_stats": { /* detailed analytics */ }
  }
}
```

When the AI model only needs the user's name and email, every token spent on preferences, metadata, permissions, and usage statistics is wasted.
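You can mitigate this even without GraphQL by projecting the payload down to the fields the task needs before it reaches the model. The sketch below uses a hypothetical `project` helper that takes dotted field paths. The filler values stand in for the elided fields in the payload above and are invented. Serialized character count serves only as a rough proxy for token count.

```python
import json
from typing import Any


def project(payload: dict[str, Any], paths: list[str]) -> dict[str, Any]:
    """Keep only the dotted paths (e.g. "user.profile.name") present in payload."""
    result: dict[str, Any] = {}
    for path in paths:
        keys = path.split(".")
        source: Any = payload
        # Walk down the source; skip the path entirely if any key is missing.
        for key in keys:
            if not isinstance(source, dict) or key not in source:
                break
            source = source[key]
        else:
            # Rebuild the same nesting in the result and copy the leaf value.
            target = result
            for key in keys[:-1]:
                target = target.setdefault(key, {})
            target[keys[-1]] = source
    return result


# Stand-in for the over-fetched response above (filler fields are invented).
full_response = {
    "user": {
        "id": "12345",
        "profile": {
            "name": "John Doe",
            "email": "john@example.com",
            "preferences": {f"pref_{i}": True for i in range(50)},
            "metadata": {"sessions": list(range(100))},
        },
        "permissions": [{"scope": f"scope_{i}", "granted": True} for i in range(20)],
        "usage_stats": {"requests": 1234, "errors": 5},
    }
}

trimmed = project(full_response, ["user.profile.name", "user.profile.email"])
print(json.dumps(trimmed))
# {"user": {"profile": {"name": "John Doe", "email": "john@example.com"}}}
print(len(json.dumps(full_response)), "->", len(json.dumps(trimmed)), "characters")
```

The tool still calls the same API. Only the text handed back to the model shrinks.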

Dynamic Toolsets: The 100x Optimization

One of the most promising solutions to the token bloat problem is the concept of Dynamic Toolsets. Instead of providing the LLM with a static, exhaustive list of all available tools, a dynamic toolset intelligently filters and presents only the most relevant tools based on the user's prompt. This "just-in-time" approach to tool loading can dramatically reduce the number of tokens required for each interaction.

In a recent blog post, Speakeasy demonstrated a refined Dynamic Toolset implementation that achieved up to a 160x token reduction compared to static toolsets [1]. Their approach combines progressive search and semantic search to provide the best of both worlds: the discoverability of a large toolset with the efficiency of a small one.

Here's how it works:

- Context Awareness: The LLM is initially given a high-level overview of the available tool categories, allowing it to understand the server's capabilities without being overwhelmed by a long list of tools.

- Natural Language Discovery: The LLM can then use natural language to search for the specific tools it needs. For example, it might ask to "find tools for managing customer deals."

- Just-in-Time Loading: Only the tools and schemas that are actually needed for the task are loaded into the context window, keeping it lean and efficient.
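To see where savings of this magnitude can come from, compare the per-request context cost of the two approaches. The numbers below are illustrative assumptions, not figures from the Speakeasy post: 400 tools, about 300 tokens per tool schema, a 500-token category overview, and three tools loaded per task.

```python
def static_cost(num_tools: int, tokens_per_schema: int) -> int:
    """Static toolset: every tool schema is sent with every request."""
    return num_tools * tokens_per_schema


def dynamic_cost(overview_tokens: int, tools_loaded: int, tokens_per_schema: int) -> int:
    """Dynamic toolset: a category overview plus only the schemas the task loads."""
    return overview_tokens + tools_loaded * tokens_per_schema


static = static_cost(num_tools=400, tokens_per_schema=300)
dynamic = dynamic_cost(overview_tokens=500, tools_loaded=3, tokens_per_schema=300)
print(f"static={static}, dynamic={dynamic}, reduction={static / dynamic:.1f}x")
# static=120000, dynamic=1400, reduction=85.7x
```

This simple model leaves out the tokens spent on the search calls and their results, which the multi-step flow adds back. The ratio still grows with catalog size, because the static cost scales with every tool on the server while the dynamic cost scales only with what a given task uses.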

This approach not only reduces token consumption but also makes it possible to build MCP servers with hundreds or even thousands of tools without overwhelming the LLM.

A Deeper Dive into Dynamic Toolsets

The core idea behind Dynamic Toolsets is to treat your tool definitions like a searchable database. Instead of sending the entire tool schema with every request, you send a much smaller, high-level description of the available tools. The LLM can then use a dedicated search_tools tool to find the specific tools it needs for a given task. This creates a multi-step process that looks something like this:

- Initial Prompt: The user provides a prompt, such as "Find the latest invoice for customer ACME Corp and email it to them."

- Tool Search: The LLM, having been provided with a search_tools tool, uses it to find relevant tools. It might make calls like search_tools("find invoice") and search_tools("send email").

- Tool Loading: The MCP server responds with the full definitions for the find_invoice and send_email tools.

- Tool Execution: The LLM can now use the loaded tools to complete the task.
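The flow above can be sketched as a small in-memory registry. This is a hypothetical illustration. The tool names come from the example prompt, but the descriptions and schemas are invented. Simple keyword overlap stands in for the semantic (embedding-based) search a production server would more likely use. The full definitions use the `name`, `description`, and `inputSchema` fields of MCP tool listings.

```python
from dataclasses import dataclass, field


@dataclass
class Tool:
    name: str
    category: str
    description: str
    input_schema: dict = field(default_factory=dict)


# Hypothetical catalog; a real server might hold hundreds of these.
REGISTRY = [
    Tool("find_invoice", "billing", "find the latest invoice for a customer",
         {"type": "object", "properties": {"customer": {"type": "string"}}}),
    Tool("send_email", "messaging", "send an email with an optional attachment",
         {"type": "object", "properties": {"to": {"type": "string"}, "body": {"type": "string"}}}),
    Tool("create_deal", "crm", "create a new customer deal",
         {"type": "object", "properties": {"name": {"type": "string"}}}),
]


def list_categories() -> dict[str, int]:
    """High-level overview: category names and how many tools each holds."""
    counts: dict[str, int] = {}
    for tool in REGISTRY:
        counts[tool.category] = counts.get(tool.category, 0) + 1
    return counts


def search_tools(query: str, limit: int = 3) -> list[dict]:
    """Return lightweight matches (name and description only), ranked by word overlap."""
    words = set(query.lower().split())
    scored = []
    for tool in REGISTRY:
        text = set(f"{tool.name.replace('_', ' ')} {tool.description}".lower().split())
        score = len(words & text)
        if score:
            scored.append((score, tool))
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [{"name": t.name, "description": t.description} for _, t in scored[:limit]]


def load_tools(names: list[str]) -> list[dict]:
    """Return full definitions only for the requested tools (just-in-time loading)."""
    wanted = set(names)
    return [
        {"name": t.name, "description": t.description, "inputSchema": t.input_schema}
        for t in REGISTRY
        if t.name in wanted
    ]


print(list_categories())  # {'billing': 1, 'messaging': 1, 'crm': 1}
matches = search_tools("find invoice") + search_tools("send email")
definitions = load_tools([m["name"] for m in matches])
print([d["name"] for d in definitions])  # ['find_invoice', 'send_email']
```

Search results omit the input schemas on purpose. Only the tools the model actually selects pay the full schema cost.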

This approach has several advantages:

- Massive Token Savings: The initial prompt is much smaller, as it doesn't contain the full tool definitions.

- Scalability: You can have a massive number of tools available without overwhelming the LLM's context window.

- Discoverability: The LLM can discover new tools and capabilities at runtime.

However, there are also some potential disadvantages to consider:

- Increased Latency: The multi-step process of searching for and then executing tools can add latency to the interaction.

- Increased Complexity: Implementing a Dynamic Toolset requires more complex server-side logic.

GraphQL: The 80% Optimization for Data-Heavy APIs

For data-heavy APIs, another powerful optimization strategy is to use GraphQL. GraphQL is a query language for APIs that allows clients to request exactly the data they need, and nothing more. By placing a GraphQL layer between your MCP server and your existing APIs, you can eliminate over-fetching and dramatically reduce token usage.

In a recent article, Mukundan Kidambi described how his team achieved a 70-80% reduction in token usage by consolidating their entire data layer into just two GraphQL-powered MCP tools [2]:

- get_graphql_schema: This tool allows the LLM to introspect the GraphQL schema and understand the available data and operations.

- execute_graphql_query: This tool allows the LLM to execute GraphQL queries and receive precisely the data it requested.

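Here's a simplified Python example of a GraphQL-backed server exposing these two tools. For brevity, it uses a plain Flask endpoint that dispatches on a tool_name field. A production MCP server would instead speak the protocol's JSON-RPC 2.0 messages, typically through an MCP SDK: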

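```python
from flask import Flask, jsonify, request
import graphene


# Define your GraphQL schema (a toy example; in practice it would wrap your APIs)
class Query(graphene.ObjectType):
    hello = graphene.String(name=graphene.String(default_value="stranger"))

    def resolve_hello(root, info, name):
        return f"Hello {name}"


schema = graphene.Schema(query=Query)

# Create your Flask app
app = Flask(__name__)


@app.route("/mcp", methods=["POST"])
def mcp_server():
    # Simplified dispatcher keyed on "tool_name"; not the MCP JSON-RPC wire format
    data = request.get_json(silent=True) or {}
    tool_name = data.get("tool_name")

    if tool_name == "get_graphql_schema":
        # str(schema) renders the schema as GraphQL SDL the LLM can read
        return jsonify({"schema": str(schema)})

    if tool_name == "execute_graphql_query":
        query = data.get("query")
        if not query:
            return jsonify({"error": "Missing 'query'"}), 400
        # Execute the query; the response contains only the requested fields
        result = schema.execute(query, variable_values=data.get("variables"))
        payload = {"data": result.data}
        if result.errors:
            # Report errors instead of silently returning null data
            payload["errors"] = [str(error) for error in result.errors]
        return jsonify(payload)

    return jsonify({"error": "Invalid tool name"}), 400


if __name__ == "__main__":
    app.run(debug=True)  # debug mode is for local development only
```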

This approach not only reduces token consumption but also simplifies the MCP server by reducing the number of tools from potentially dozens to just two.

Implementation Considerations for GraphQL

While the benefits of using GraphQL for MCP are clear, there are some implementation challenges to consider:

- Schema Generation: If you have a large number of existing APIs, manually creating a GraphQL schema can be a daunting task. Fortunately, there are tools available that can automate this process by introspecting your existing OpenAPI or Swagger specifications.

- Authorization: It's crucial to ensure that your existing authorization logic is preserved when you introduce a GraphQL layer. This can be achieved by passing the user's context from the MCP session to the GraphQL executor.
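To make the schema-generation point concrete, here is a deliberately small sketch that turns an OpenAPI component schema into a GraphQL SDL type definition. It is an illustrative assumption, not how any particular converter works. It handles only primitive types, arrays, and $ref. It ignores enums, oneOf/allOf, and format hints, and it falls back to String for anything it does not recognize. Dedicated converters cover far more of the OpenAPI specification.

```python
# OpenAPI primitive types mapped to GraphQL scalars (format hints are ignored here).
SCALARS = {"string": "String", "integer": "Int", "number": "Float", "boolean": "Boolean"}


def graphql_type(prop: dict) -> str:
    """Translate one OpenAPI property schema into a GraphQL type reference."""
    if "$ref" in prop:
        # "#/components/schemas/Profile" -> "Profile"
        return prop["$ref"].rsplit("/", 1)[-1]
    if prop.get("type") == "array":
        return f"[{graphql_type(prop['items'])}]"
    # Unknown or missing types fall back to String (a lossy simplification).
    return SCALARS.get(prop.get("type", ""), "String")


def to_sdl(name: str, schema: dict) -> str:
    """Render an OpenAPI object schema as a GraphQL SDL type definition."""
    required = set(schema.get("required", []))
    lines = [f"type {name} {{"]
    for field_name, prop in schema.get("properties", {}).items():
        bang = "!" if field_name in required else ""  # required fields become non-null
        lines.append(f"  {field_name}: {graphql_type(prop)}{bang}")
    lines.append("}")
    return "\n".join(lines)


user_schema = {
    "type": "object",
    "required": ["id"],
    "properties": {
        "id": {"type": "string"},
        "profile": {"$ref": "#/components/schemas/Profile"},
        "permissions": {"type": "array", "items": {"type": "string"}},
    },
}
print(to_sdl("User", user_schema))
# type User {
#   id: String!
#   profile: Profile
#   permissions: [String]
# }
```

Generating types is only half the job. Each generated field still needs a resolver that calls the underlying REST endpoint with the caller's credentials, so your existing authorization checks keep applying.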

Choosing the Right Strategy

So, which optimization strategy is right for you? The answer depends on your specific use case.

- Dynamic Toolsets are a great choice if you have a large and diverse set of tools and you want to maximize token savings and scalability.

- GraphQL is an excellent option if you have data-heavy APIs and you want to eliminate over-fetching and simplify your MCP server.

It's also worth noting that these two strategies are not mutually exclusive. You could, for example, use a Dynamic Toolset to allow the LLM to discover a graphql tool, which it could then use to query your data layer.

Other Optimization Strategies

While Dynamic Toolsets and GraphQL are two of the most powerful optimization strategies, there are other techniques you can use to further reduce your token consumption:

- Semantic Caching: Cache the results of semantically similar queries to avoid redundant API calls and token usage. This can be particularly effective for frequently accessed data.

- Response Compression: Reduce large API responses to their essentials before sending them to the LLM, for example by minifying JSON, dropping null or empty fields, and truncating long arrays. Transport-level compression such as gzip does not help here. It reduces bytes on the wire, but the model still receives, and you still pay for, the full decompressed text.

- Intelligent Tool Design: Design your tools to be as specific and efficient as possible, avoiding unnecessary parameters and responses. For example, instead of having a single update_user tool that can update any field, you might have separate update_user_email and update_user_name tools. Weigh this against the tool proliferation cost described earlier.
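Here is a minimal sketch of condensing a response before it reaches the model. It minifies JSON, drops null and empty values, and caps long arrays. The five-item cap and the truncation marker are arbitrary assumptions. Whether truncation is acceptable depends on what the task needs.

```python
import json
from typing import Any


def condense(value: Any, max_items: int = 5) -> Any:
    """Recursively drop null/empty values and truncate long lists."""
    if isinstance(value, dict):
        out = {}
        for key, item in value.items():
            item = condense(item, max_items)
            # Keep meaningful falsy values such as False and 0.
            if item not in (None, "", [], {}):
                out[key] = item
        return out
    if isinstance(value, list):
        items = [condense(v, max_items) for v in value[:max_items]]
        if len(value) > max_items:
            # Tell the model data was omitted rather than silently dropping it.
            items.append(f"... {len(value) - max_items} more items omitted")
        return items
    return value


def to_llm_text(payload: Any) -> str:
    """Minified JSON: no indentation and no spaces after separators."""
    return json.dumps(condense(payload), separators=(",", ":"))


raw = {"id": "12345", "nickname": None, "tags": [], "events": list(range(8)), "active": False}
print(to_llm_text(raw))
# {"id":"12345","events":[0,1,2,3,4,"... 3 more items omitted"],"active":false}
```

All three transformations shrink the text the model actually reads, which is what determines token usage.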

Conclusion

The high cost of token consumption is a significant challenge for developers building MCP-powered applications. However, by embracing innovative strategies like Dynamic Toolsets and GraphQL, it's possible to dramatically reduce your token usage and build scalable, cost-effective AI agents. As the MCP ecosystem continues to evolve, we can expect to see even more powerful optimization techniques emerge, further democratizing the development of intelligent systems.

References

[1] Reducing MCP token usage by 100x — you don't need code mode | Speakeasy